Author: Michel Leidson

Abstract:

Following the considerations of the performance management proposal PIP-4, we propose an improvement to the monitoring of validator nodes.

Motivation:

One of the criteria for being a performant validator is keeping the node's uptime high. Validator performance is based on checkpoint production and attestation on Heimdall, and on block production and attestation on Bor. Any drop in either degrades network health. Besides missing out on rewards, validators lose performance and earn a bad reputation. With node monitoring in its current state, failures can only be mitigated after they have happened.

Benefits:

Monitoring makes it possible to anticipate instabilities in the node, since these failures directly lower the validator's performance. With this information in hand, the operator can correct the problem early, reducing downtime and avoiding missed checkpoints.

Architecture:

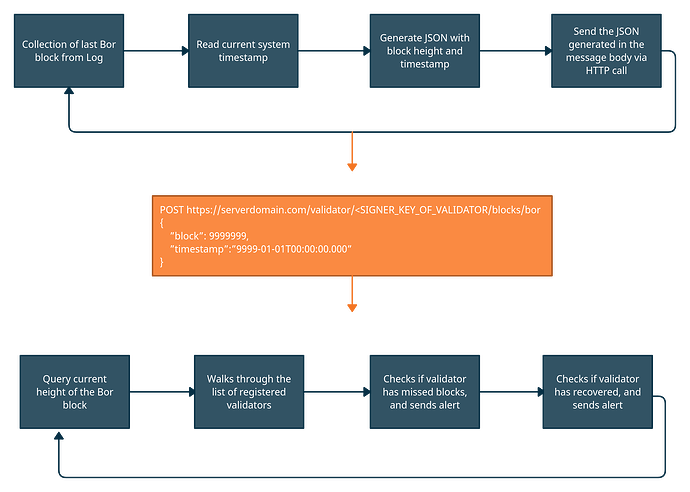

The system is designed with a client-server architecture. The client application runs on the validator side, collects block heights from the node's log file, and sends them to the server application. The server receives this information and persists it in order to generate alert messages based on how well each validator signs Bor blocks.

Applications present in the system

1 – Client Application (Log information collection, and sending via HTTP call)

2 – Server Application (Receives information from validators, persists and generates alert messages)

Provide alert on deficient status:

- Missing Block Monitoring (“MBM”): Occurs when a validator fails to sign blocks.

- Low Block Performance (“LBP”): May arise in situations where the node cannot keep up with the current height of the network.
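
The LBP condition can be pictured as a comparison between the height a node reports and the current network height. The sketch below is only an illustration of that idea: the function name classify_height and the MAX_LAG threshold of 50 blocks are hypothetical and are not taken from the server application, whose actual rules are not described here.

```shell
#!/bin/bash

# Hypothetical threshold: how many blocks a node may trail the network
# before it is reported as Low Block Performance (LBP).
MAX_LAG=${MAX_LAG:-50}

# classify_height NODE_HEIGHT NETWORK_HEIGHT
# Prints "LBP" when the node trails the network by more than MAX_LAG blocks,
# otherwise prints "OK".
classify_height() {
  local node_height=$1
  local network_height=$2
  local lag=$(( network_height - node_height ))

  if [ "$lag" -gt "$MAX_LAG" ]; then
    echo "LBP"
  else
    echo "OK"
  fi
}

classify_height 1000 1010   # prints OK  (10 blocks behind)
classify_height 1000 1200   # prints LBP (200 blocks behind)
```

A real deployment would need to pick the threshold based on how often the client reports, since a report every 2 seconds always trails the network slightly.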

Specification

Monitoring should cover two parts: the PoS node, known as Heimdall, and the EVM node, called Bor. That said, getting the Bor part fully working requires action on the validator side.

Client Application Script

#!/bin/bash

source /etc/cbbc/config

# Lowercase the signer address for use in the URL path.

VAR=$(echo "$SIGNER_KEY" | awk '{ print tolower($0) }')

COMPLETE_API_URL="$API_URL/validator/$VAR/blocks/bor"

# Stop early and report every variable that is missing from the config file.

if [ -z "$API_URL" ] || [ -z "$FILE_PATH" ] || [ -z "$SIGNER_KEY" ]

then

echo "Set your variables in the /etc/cbbc/config file!"

if [ -z "$API_URL" ]

then

echo -e "Variable API_URL is empty!"

fi

if [ -z "$FILE_PATH" ]

then

echo -e "Variable FILE_PATH is empty!"

fi

if [ -z "$SIGNER_KEY" ]

then

echo -e "Variable SIGNER_KEY is empty!"

fi

else

while true; do

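# Take the block number from the most recent "Imported new chain segment" log line and strip the thousands separators.

BLOCK=$(tail -n 1000 "$FILE_PATH" | grep "Imported new chain segment" | tail -1 | awk -F'number=' '{ print $2 }' | awk -F' ' '{ print $1 }' | sed -e 's/,//g')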

TIMESTAMP=$(date +'%Y-%m-%dT%H:%M:%S.%N')

JSON='{ "block": '$BLOCK' ,"timestamp":"'$TIMESTAMP'" }'

if [ -z "$BLOCK" ]

then

echo -e "No block found in log file: $FILE_PATH\n"

else

echo -e "Block collected $BLOCK from file $FILE_PATH\n"

echo -e "Request: Send JSON to API $COMPLETE_API_URL\n$JSON\n"

echo "Response: "

curl -X POST "$COMPLETE_API_URL" -d "$JSON" -H 'Content-Type: application/json'

echo -e "\n"

fi

sleep 2;

done

fi
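
Because the script loads its settings with "source /etc/cbbc/config", the configuration file is itself a fragment of shell variable assignments. A minimal sketch of such a file follows. All three values are placeholders chosen for illustration (the log path in particular is hypothetical) and are not defaults taken from the repository.

```shell
# /etc/cbbc/config (illustrative values only)

# Base URL of the server application, without a trailing slash,
# since the script appends "/validator/<key>/blocks/bor" to it.
API_URL="https://server-domain.com"

# Path to the Bor log file that the script tails (hypothetical path).
FILE_PATH="/var/log/bor/bor.log"

# Signer address of the validator; the script lowercases it before use.
SIGNER_KEY="<SIGNER_KEY_OF_VALIDATOR>"
```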

You can access the repository through the link:

GitHub - Michel-Leidson/collect-bor-blocks-client

The script first reads the configuration file, located by default at /etc/cbbc/config, which defines the API_URL, FILE_PATH and SIGNER_KEY variables. Right after reading the file, it validates every variable needed for execution; if any is undefined, it prints an error stating which one is missing. With the variables loaded, the script collects the current block height from the log and saves it in the BLOCK variable. It then records the date and time of collection in the TIMESTAMP variable using the “date” command. Once this information is collected, “curl” sends a JSON body containing the block height and the collection timestamp in an HTTP POST request, as in the example below:

POST https://server-domain.com/validator/<SIGNER_KEY_OF_VALIDATOR>/blocks/bor

{

"block": 9999999,

"timestamp":"9999-01-01T00:00:00.000"

}
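
To check the extraction and payload steps without touching a live node or the server, the same pipeline can be run against a sample log line. This is a sketch under stated assumptions: the helper names extract_block and build_payload are hypothetical, and the sample line is illustrative, so the real Bor log line may carry different fields.

```shell
#!/bin/bash

# Read log lines on stdin and print the block number from the most recent
# "Imported new chain segment" line, with thousands separators removed.
extract_block() {
  grep "Imported new chain segment" | tail -n 1 |
    awk -F'number=' '{ print $2 }' | awk '{ print $1 }' | sed -e 's/,//g'
}

# build_payload BLOCK TIMESTAMP
# Print the JSON body that would be sent in the POST request.
build_payload() {
  printf '{ "block": %s, "timestamp": "%s" }' "$1" "$2"
}

# Illustrative log line; not copied from a real node.
SAMPLE='INFO [01-01|00:00:00.000] Imported new chain segment blocks=1 number=9,999,999 hash=abc'

BLOCK=$(echo "$SAMPLE" | extract_block)
build_payload "$BLOCK" "9999-01-01T00:00:00.000"
echo
# prints: { "block": 9999999, "timestamp": "9999-01-01T00:00:00.000" }
```

Printing the body instead of calling curl keeps the check free of side effects; the printed string can be compared with the example request above before pointing the client at a real server.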

FAQ:

1 - On which notification channels will block monitoring be implemented?

Telegram and Discord.

2 - Are there risks for my validator?

There are no risks. Today, the metrics are sent to a central instance through a subscription mechanism, and the information about Bor blocks is collected there.

Note: In a future version, if approved, the monitoring of bor blocks in real time will be implemented through: https://monitor.stakepool.dev.br/