For me, the most frustrating thing about the reorgs is that most of them are detectable before they happen. That shouldn’t be the case - if I can predict a reorg before it happens, the nodes should be able to as well.

Most reorgs are caused by a scenario like this:

(EDIT: note that validator sprints are 64 blocks long. Whichever chain - the backup or the primary - reaches block 64 first and is built on by the primary of the next sprint becomes the main chain.)

- Validator A suffers some sort of failure at the start of its sprint and is unable to propagate blocks.

- Validator B is validator A’s backup. After 2s go by with no block from validator A, validator B makes the backup block. Then another 2s go by and validator B makes another backup block. Validator B’s blocktime is 4s because it has to wait and make sure it doesn’t receive a block from validator A before it starts on its own. Let’s say, for the sake of this example, that validator B makes 10 ‘backup’ blocks and takes 40s to do so.

- Validator A comes back online and starts making its own blocks. Validator A does not accept any of validator B’s blocks.

Note: if validator B isn’t past block 32 at this point, then I know there will be a reorg… it just hasn’t happened yet.

- Another 20 seconds go by. During those 20s, validator B has made an additional 5 blocks (because its blocktime is 4s: 20/4=5) and is now on block 15. Validator A has made 10 blocks during those 20 seconds (its blocktime is 2s because it is the primary: 20/2=10).

- Another 20 seconds go by. Validator B made another 5 blocks, and validator A made another 10 blocks. They’ve now each made a total of 20 blocks. As soon as the block number from validator A reaches or exceeds the block number for validator B, validator A’s ‘chain’ becomes the primary chain. Everyone’s node will now recognize validator A’s chain as the main one and everyone will experience a 20 block reorg as the 20 blocks we received from validator B are ‘undone’ and replaced with the 20 received from validator A.

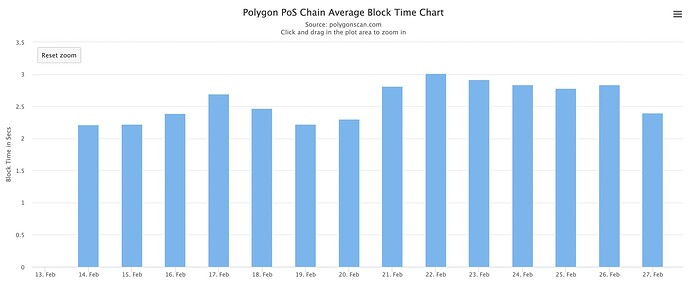
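To make the catch-up arithmetic in the walkthrough concrete, here is a rough Python sketch. It assumes both validators produce blocks at a steady rate (2s for the primary, 4s for the backup, 64-block sprints, all taken from the example) and ignores rounding, so it only approximates the scenario and is not how any client computes this. The name `catch_up` is made up for illustration.

```python
SPRINT_LEN = 64    # blocks per sprint (from the example)
PRIMARY_BT = 2.0   # seconds per block for the primary
BACKUP_BT = 4.0    # seconds per block for the backup (2s wait + 2s block)


def catch_up(backup_lead_blocks):
    """Estimate when a recovered primary draws level with the backup.

    backup_lead_blocks: how many blocks the backup has made at the moment
    the primary comes back online.

    Returns (seconds_after_recovery, reorg_depth, reorg_expected).
    """
    primary_rate = 1 / PRIMARY_BT   # blocks per second
    backup_rate = 1 / BACKUP_BT     # blocks per second

    # The primary closes the gap at the difference between the two rates.
    seconds = backup_lead_blocks / (primary_rate - backup_rate)

    # Blocks the primary has made by then = backup blocks that get replaced.
    depth = seconds * primary_rate

    # The primary only wins if it draws level before the sprint ends.
    return seconds, depth, depth <= SPRINT_LEN


print(catch_up(10))  # (40.0, 20.0, True)  -> the 20 block reorg from the example
print(catch_up(33))  # (132.0, 66.0, False) -> backup finishes the sprint first
```

Under these assumptions the primary draws level at twice the backup's lead, which is where the block 32 rule of thumb comes from: a lead of more than 32 blocks pushes the catch-up point past block 64.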

Would a 5s blocktime decrease the number of reorgs? Well… only if the initial ‘freeze’ / ‘hang’ time of validator A was less than 10s. That’s because with 5s blocktimes, the backup blocktime would be 10s, so validator A would come out of the freeze and release block 1 around the same time as validator B’s block 1, and it would only overwrite the top block (in other words, validator B wouldn’t have released a second block before validator A’s first block). (backup: 15/10=1.5, round to 1) (primary: (15-10)/5=1)

Is this common? Well, we can tell the duration of the average reorg-causing validator hang/freeze by looking at how many blocks get reorged. In the current system, a 10s hang would mean the backup validator has propagated 4 blocks by the time the primary catches up (at 18s, the backup has 4 (18/4=4.5, round to 4) and the primary has 4 ((18-10)/2=4)).
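The two comparisons above (10s hang with 2s/4s blocktimes versus 5s/10s blocktimes) can be reproduced with a small block-by-block sketch. This is only a toy model under my own assumptions: the backup's k-th block lands at k times its blocktime, the primary's k-th block lands at the hang time plus k times its blocktime, and the primary wins as soon as its block count reaches the backup's. The function name `first_catch_up` is hypothetical.

```python
def first_catch_up(hang_s, primary_bt, backup_bt, sprint_len=64):
    """Step through the primary's blocks until it matches the backup.

    Returns (time_s, reorg_depth), or None if the backup finishes the
    sprint before the primary can draw level.
    """
    for k in range(1, sprint_len + 1):
        t = hang_s + k * primary_bt     # when the primary's k-th block lands
        backup_blocks = t // backup_bt  # backup blocks published by then (rounded down)
        if k >= backup_blocks:
            return t, backup_blocks     # these backup blocks get replaced
        if backup_blocks >= sprint_len:
            return None                 # backup already completed the sprint
    return None


print(first_catch_up(10, primary_bt=2, backup_bt=4))   # (18, 4)
print(first_catch_up(10, primary_bt=5, backup_bt=10))  # (15, 1)
```

Because this version rounds down at every step, it can come out a block or so different from a rate-based estimate for long hangs.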

So how many reorgs would this change prevent?

Go here and take a look:

https://polygonscan.com/blocks_forked

While I am against increasing the blocktime, I am 100% on board with increasing the wiggle time. Wiggle time is how long the backup must wait on the primary before it can start making its own block.

What I’d like to see is an incrementally decreasing wiggle time based on how many blocks into the sprint we are. For example, if we are on the first block of the sprint, then it will be very easy for the primary to catch up and reorg the backup… but if we’re towards the end of the sprint, then it will be quite difficult, as the primary has less time to catch up to the backup.

As an extreme example: imagine a scenario where the wiggle time was 10s for the first block, 8s for the second, 6s for the third… etc. until you get to 0s for the 6th. As I understand it (and I am not by any means an expert on consensus here, so if this is wrong someone please correct me), this would have a significant impact on the depth of the reorgs. If the backup makes it to the 6th block before the primary recovers, then there is no chance that the primary could pass the backup, since both will now have 2s blocktimes. Before then, however, the primary would have 12 + 10 + 8 + 6 + 4 + 2 = 42s to make 6 blocks, meaning that with 2s blocktimes it could have a hang/freeze for a max of 30s (42 - (6 × 2)).
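The 42s and 30s figures can be checked with a few lines of Python. This just replays the arithmetic from the example (each backup block costs its wiggle time plus a 2s blocktime); the helper name `hang_budget` is made up, and none of this reflects an actual consensus implementation.

```python
from itertools import accumulate

BLOCK_TIME = 2  # seconds, normal blocktime


def hang_budget(wiggle_schedule, block_time=BLOCK_TIME):
    """Return (backup_arrival_times, max_primary_hang) for a wiggle schedule.

    wiggle_schedule: seconds the backup waits before each of its blocks.
    """
    # Each backup block takes its wiggle time plus one normal blocktime.
    per_block = [w + block_time for w in wiggle_schedule]
    arrivals = list(accumulate(per_block))

    # To match the backup at the last scheduled block, the primary needs
    # one blocktime per block; whatever is left over is the hang it can absorb.
    max_hang = arrivals[-1] - len(wiggle_schedule) * block_time
    return arrivals, max_hang


arrivals, budget = hang_budget([10, 8, 6, 4, 2, 0])
print(arrivals)  # [12, 22, 30, 36, 40, 42]
print(budget)    # 30
```

Swapping in other schedules (a gentler 10, 9, 8… ramp, for instance) is an easy way to see how the hang budget trades off against slow blocks at the start of a sprint.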

I like this scheme because it lessens the depth of reorgs at all levels: it removes the possibility of small reorgs (it’s even more effective than the 5s blocktime/wiggle time for preventing small reorgs) and it prevents large reorgs as well.

Consensus would have to be changed, and I’m sure there are ways to ‘game’ the system that would need to be accounted for… We’d also need to make sure the wiggle time’s incremental decrease gets reset back to 12s whenever the backup receives a block from the primary that matches its block number (to prevent the backup from immediately taking over if the primary is slightly late halfway through the sprint).
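Here is one way the reset rule could look, as a very rough Python sketch. Everything in it is hypothetical: the class name `BackupTimer`, the method names, and the 12/10/8/6/4/2 second delays (wiggle plus 2s blocktime, taken from the example above). It only illustrates the bookkeeping, not how it would plug into consensus.

```python
class BackupTimer:
    """Tracks how long the backup waits before producing its next block."""

    # Seconds per backup block, shrinking with each consecutive backup block.
    DELAYS = (12, 10, 8, 6, 4, 2)

    def __init__(self):
        self.step = 0  # consecutive backup blocks produced since the last reset

    def current_delay(self):
        # Once the schedule is exhausted, stay at the final (2s) delay.
        return self.DELAYS[min(self.step, len(self.DELAYS) - 1)]

    def on_backup_block_produced(self):
        self.step += 1

    def on_primary_block(self, primary_number, backup_number):
        # The primary is keeping pace again: go back to the full 12s delay.
        if primary_number == backup_number:
            self.step = 0


timer = BackupTimer()
for _ in range(3):
    timer.on_backup_block_produced()
print(timer.current_delay())  # 6

timer.on_primary_block(primary_number=40, backup_number=40)
print(timer.current_delay())  # 12
```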

I haven’t really modelled this out yet - it’s just an idea that’s been bouncing around in my head. But I think it would help the chain far more in every measurable metric than the 5s blocktime proposal would. Its only downside is the possibility of 12s / 10s blocks at the beginning of a sprint… but you’d be able to rest comfortably knowing that if it was a 4s block it’d probably get reorged anyway (and the reorg’d replacement block would probably be more equivalent to a 30s block lol). I bet someone else could come up with an even better version of this concept.

But yeah - I’d love to model this out. This isn’t related to the tx propagation stuff I’m working on. It would be fun to do.