Pre-PIP Discussion: Addressing Reorgs and Gas Spikes

Changes in BaseFeeChangeDenominator and SprintLength

Note: The below poll serves only to gauge general sentiment towards the changes and is by no means a binding vote concerning the hardfork’s contents or implementation.

The poll is one of several feedback tools employed, alongside the discussion thread and the Polygon Builders Session, each addressing a different audience, i.e., validators, the general community, and core infrastructure developers. A hardfork on Polygon is reached in a decentralized manner via rough consensus of two-thirds of the $MATIC stake validating the network.

As of January 18th, all active validators have executed the Delhi hardfork, with 3.5 billion staked $MATIC (including delegations) now validating the chain’s upgraded version.

For more information on how upgrades are performed, please refer to the Polygon documentation.

- Decreasing SprintLength to 16 blocks from 64 and increasing BaseFeeChangeDenominator from 8 to 16 (as proposed in this document)

- Only decreasing SprintLength to 16 blocks from 64

- Only increasing BaseFeeChangeDenominator from 8 to 16

- Other solution (please specify in the comments)

- None of the above

Overview

In this thread, we want to invite the community to discuss the proposed changes in the Polygon PoS chain aiming to provide better UX by addressing two major concerns - a) reorgs and b) gas spikes during high demand.

Reorganizations can occur in the chain under the consensus mechanism Bor uses. Although the frequency of reorgs has fallen since validators began using the BDN, they are still prevalent and a cause for concern among DApp developers. One of the ways identified to mitigate the issue is to reduce the sprint length from the current 64 blocks to 16 blocks.

The Polygon PoS chain saw a major update at the beginning of 2022, when EIP-1559 was rolled out on Mainnet as part of the London Hardfork, which changed the on-chain gas price dynamics. Although it works well the majority of the time, during high demand we have seen huge gas spikes due to a rapid increase in the base fee. We propose to smooth the change in base fee by changing the BaseFeeChangeDenominator from 8 to 16.

Chain Reorganisations

A chain reorganisation (or “reorg”) takes place when a validator node receives blocks that are part of a new “longer” or “higher” version of the chain (we refer to this as the chain with the highest difficulty, or the “canonical” chain). The validator node will then ignore/deactivate blocks in its old highest chain in favour of the blocks that build the new highest chain.

The impact on applications (and ultimately users) is related to transaction finality as reorgs disrupt an application’s ability to be confident that their transactions are part of the canonical version of the chain. To get around this, applications need to wait for additional block confirmations.

Why are there reorgs in the first place? Because the consensus mechanism used in PoS Mainnet is probabilistic - meaning that finality is eventual and typically based on the number of confirmations layered on top of the block holding your transaction.

In a typical industry example, a transaction on the Bitcoin Network is usually considered “final” after about 6-12 block confirmations. On PoS, we suggest that applications wait approximately 50 or more blocks to feel safe about transaction finality. This can lead to undesirable user experiences unless the application teams build in workarounds.
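To make the confirmation rule concrete, here is a minimal sketch of the check an application might run before treating a transaction as settled. The function names and the exact threshold constant are assumptions for illustration (the post only suggests "approximately 50 or more blocks"), and confirmations are counted as the blocks layered on top of the block holding the transaction.

```python
# Hypothetical helper names; the threshold follows the "approximately 50 or
# more blocks" guidance above and is not a protocol constant.
RECOMMENDED_CONFIRMATIONS = 50


def confirmations(tx_block_number, head_block_number):
    """Number of blocks layered on top of the block holding the transaction."""
    return max(head_block_number - tx_block_number, 0)


def is_probably_final(tx_block_number, head_block_number,
                      required=RECOMMENDED_CONFIRMATIONS):
    """True once enough blocks have been built on top of the transaction's block."""
    return confirmations(tx_block_number, head_block_number) >= required


if __name__ == "__main__":
    # A transaction mined in block 1_000, checked at two different chain heads.
    print(is_probably_final(1_000, 1_049))  # one block short of 50 confirmations
    print(is_probably_final(1_000, 1_050))  # 50 confirmations reached
```

Because finality here is probabilistic, a check like this only lowers the chance of acting on a reorged transaction; it does not remove it.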

Proposed Solution

Based on the above, we propose a decrease in the sprint length from 64 to 16 blocks. This means that a block producer produces blocks continuously for a much shorter time (about 32 seconds at the 2-second block time) compared with the current 128 seconds. This will help a great deal in reducing the frequency and depth of reorgs. It doesn’t affect the total time or number of blocks a validator produces over a span, and hence there would be no change in the overall rewards.
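The arithmetic behind this can be sketched as follows. The 2-second block time comes from this post; the equal-turn rotation of producers, the producer names, and the 1,024-block window are simplifying assumptions for illustration only and do not reflect how Bor actually selects producers.

```python
from collections import Counter

BLOCK_TIME_SECONDS = 2  # PoS block time stated in this post


def sprint_duration_seconds(sprint_length):
    """How long one producer builds blocks continuously during a sprint."""
    return sprint_length * BLOCK_TIME_SECONDS


def blocks_per_producer(sprint_length, producers, total_blocks):
    """Count blocks per producer under a hypothetical equal-turn rotation."""
    counts = Counter()
    for block in range(total_blocks):
        sprint_index = block // sprint_length
        counts[producers[sprint_index % len(producers)]] += 1
    return dict(counts)


if __name__ == "__main__":
    producers = ["A", "B", "C", "D"]  # hypothetical producer set
    for sprint_length in (64, 16):
        print(sprint_length,
              sprint_duration_seconds(sprint_length),
              blocks_per_producer(sprint_length, producers, 1_024))
```

Under these assumptions each producer ends up with the same block count for both sprint lengths; only the length of each continuous turn changes.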

Background Work

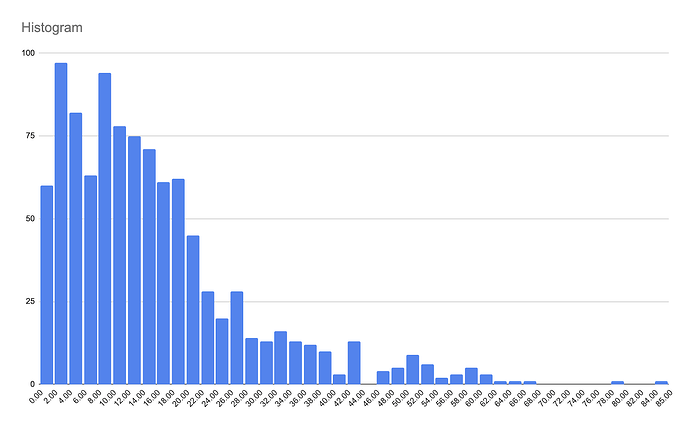

We observed that most of the reorgs have very low depth (as is clear from the below graph created on a sample set of blocks/reorgs).

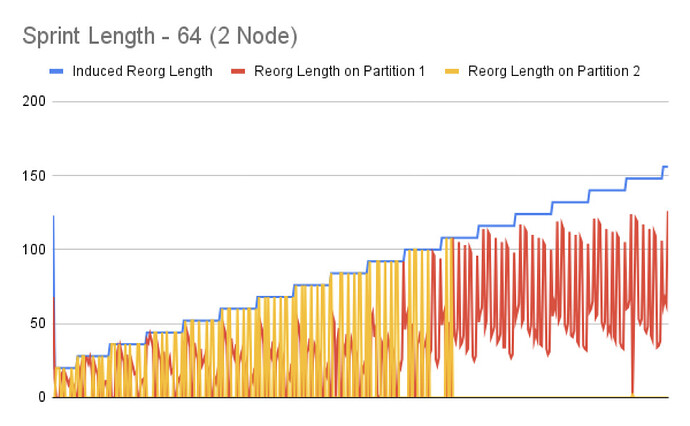

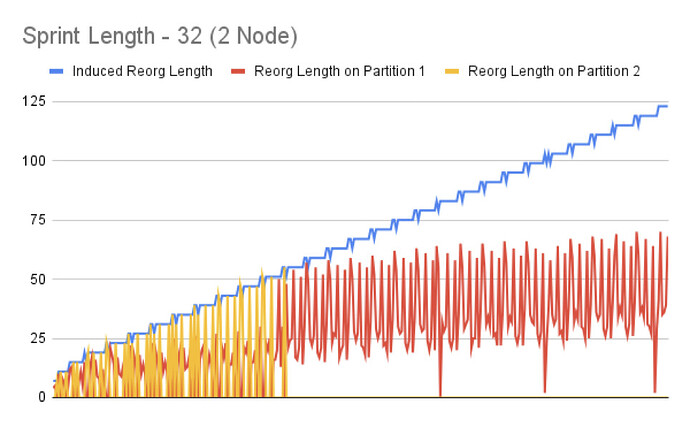

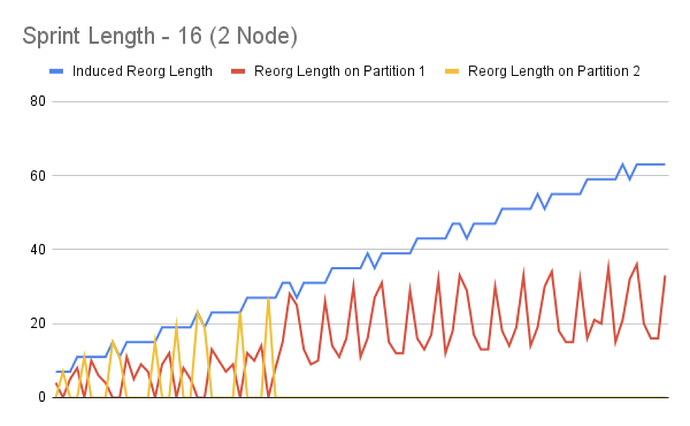

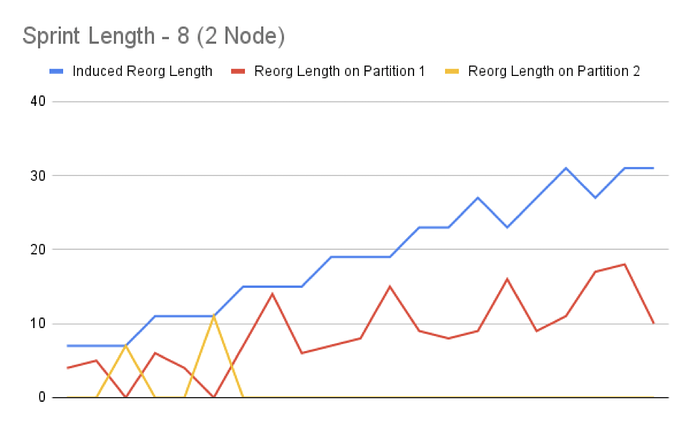

We conducted experiments by creating multiple devnets consisting of 7 nodes. In each devnet, we set a different sprint length (8, 16, 32, 64) and induced reorgs in 1 node and 2 nodes programmatically.

In the 1-node partition, we experimented by disconnecting the primary node once and the secondary node once. We induced a reorg length of 4x the sprint length and found that the maximum reorg length was around 1x the sprint length.

In the 2-node partition, we experimented by disconnecting the (primary, secondary) nodes once and the (secondary, tertiary) nodes once. We induced a reorg length of 4x the sprint length and found that the maximum reorg length was around 2x the sprint length.

As the sprint length decreased, the reorg depth also decreased. This relation is made clear in the charts in this section.
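As a rough way to read the devnet results, the observed upper bounds can be written as a rule of thumb: about 1x the sprint length with one node partitioned and about 2x with two. The helper below only restates that observation; the function name is hypothetical, and it does not model Bor or predict depths outside the tested setups.

```python
SPRINT_LENGTHS = (8, 16, 32, 64)  # sprint lengths used on the devnets


def approx_max_reorg_depth(sprint_length, partitioned_nodes):
    """Approximate maximum reorg depth observed in the devnet experiments."""
    if partitioned_nodes not in (1, 2):
        # Only 1-node and 2-node partitions were tested in the experiments.
        raise ValueError("only 1- and 2-node partitions were tested")
    return partitioned_nodes * sprint_length


if __name__ == "__main__":
    for sprint_length in SPRINT_LENGTHS:
        print(sprint_length,
              approx_max_reorg_depth(sprint_length, 1),
              approx_max_reorg_depth(sprint_length, 2))
```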

Gas Spikes

To know more about the implementation of EIP-1559 and its effects on Polygon, you can refer to this forum thread.

To summarize, the main reasons for gas spikes happening during high demand are:

- Exponential gas pricing due to EIP-1559 and the current baseFee: On Ethereum, a series of consecutive full blocks will increase the gas price by a factor of 10 every ~20 blocks (~4.3 min on average). But on the PoS chain, since the block time is 2 secs, the baseFee goes 10x in just 40 seconds. A user’s transaction becomes ineligible due to the high gasPrice right after the particular block it was fired in. As the network utilization goes high, the baseFee starts climbing and the transaction is stuck in pending until the baseFee comes back down and the transaction becomes eligible. Also, gas spikes faster than applications (wallets, etc.) can keep up or make predictions. This results in poor user experience during peak times.

- Bad contracts: Occasionally, poor design choices by dapp developers will unintentionally create a DDoS-type effect on the network and litter the transaction mempool with tons of transactions, driving up demand for blockspace and resulting in higher gas prices for everyone.

Proposed Solution

Hardfork

We would like to propose increasing the BaseFeeChangeDenominator from the current value of 8 to 16. This will smooth the increase (or decrease) in baseFee when the gas used in blocks is higher (or lower) than the target gas limit. After this change, the maximum per-block rate of change will decrease to 6.25% (100/16) from the current 12.5% (100/8).
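To illustrate the effect of the denominator, the sketch below applies the base fee update rule from the EIP-1559 specification to a run of completely full blocks and counts how many blocks it takes for the baseFee to grow 10x. The starting fee of 30 gwei, the gas target of 15,000,000, and the helper names are assumptions chosen for illustration; they are not values taken from the PoS chain configuration.

```python
BLOCK_TIME_SECONDS = 2  # PoS block time stated in this post


def next_base_fee(parent_base_fee, gas_used, gas_target, denominator):
    """Base fee update rule as written in the EIP-1559 specification."""
    if gas_used == gas_target:
        return parent_base_fee
    if gas_used > gas_target:
        delta = parent_base_fee * (gas_used - gas_target) // gas_target // denominator
        return parent_base_fee + max(delta, 1)
    delta = parent_base_fee * (gas_target - gas_used) // gas_target // denominator
    return parent_base_fee - delta


def blocks_until_multiple(denominator, multiple=10,
                          start_fee=30 * 10**9, gas_target=15_000_000):
    """Count consecutive full blocks until the base fee reaches `multiple` x start."""
    fee = start_fee
    blocks = 0
    while fee < multiple * start_fee:
        # A full block uses twice the target gas (elasticity multiplier of 2).
        fee = next_base_fee(fee, 2 * gas_target, gas_target, denominator)
        blocks += 1
    return blocks


if __name__ == "__main__":
    for denominator in (8, 16):
        blocks = blocks_until_multiple(denominator)
        print(denominator, blocks, blocks * BLOCK_TIME_SECONDS, "seconds")
```

Under these assumptions, a denominator of 8 reaches 10x after 20 full blocks (about 40 seconds at 2-second blocks), while a denominator of 16 takes 38 blocks (about 76 seconds), which is roughly the doubling in reaction time this proposal aims for.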

Background Work - Simulations

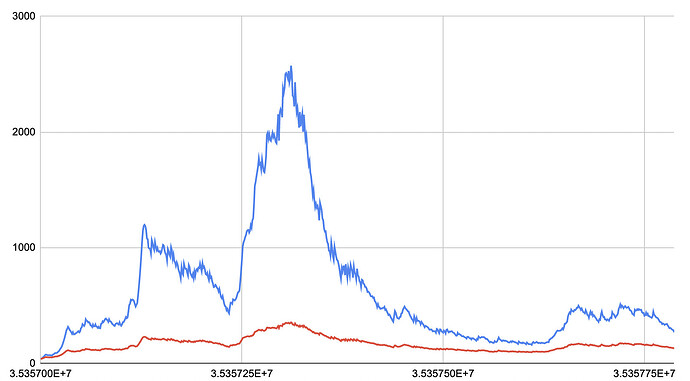

We ran several simulations and found the following:

- Blue line: recent PoS gas price spike

- Red line: ‘back fit’ calc with 2x decrease in rate of change

- Key point: rate is exponential over time (blocks) so small change in rate is magnified

Experiments using Load Bot

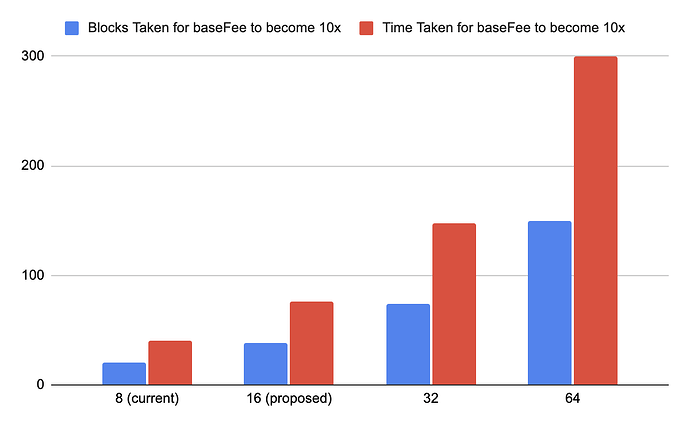

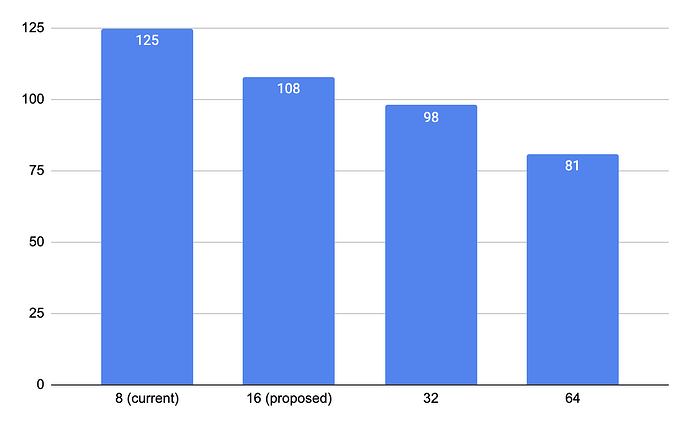

We ran the load bot on devnets we created with different BaseFeeChangeDenominator values: 8 (current), 16 (proposed), 32 and 64. There are two sets of results important to note:

- Blocks/Time taken for baseFee to become 10x (the higher the better)

- Number of blocks for 100k txs to get mined into blocks (the lower the better)

This time, we are proposing the change in BaseFeeChangeDenominator from 8 to 16.

Next Steps

We invite the community to participate in the discussion and the soft consensus vote attached to this post. In order to signal preference, each validator team present on Discourse is able to cast one vote, using their designated account.

We will be conducting a Town Hall on Monday 12th, directly related to the changes proposed in this post. This meeting will serve as a place where the bright minds of the community come together to evaluate decisions, share suggestions, and clarify any queries about the proposed changes.

We look forward to seeing and hearing from you there. The meeting invite will be sent out via email, and the session will be recorded for those who can’t attend.

Note: The scheduling of a potential hardfork will be communicated using regular channels, i.e., Discord, TG, Email, Forum.