Could any of the Polygon PoS protocol engineers or perhaps large validators comment on what exactly happened recently with that reorg? Particularly in light of the announced hard fork last month where one of the key goals was to address chain reorg risk.

I’m not on the Polygon team at all, but I’ll leave a few comments.

It just seems like terrible luck. The Polygon PoS chain relies on probabilistic consensus.

As you mentioned, the hard fork decreased the sprint length from 64 to 16 blocks, which should reduce the probability of a long reorg accordingly.

However, that’s only a probability; the chain can still produce a long reorg like this one, even if the chance is very small.

This kind of weak finality is definitely a problem, and it needs investigation and a fix.
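To make the sprint-length change a bit more concrete, here is a toy calculation of how long one block producer holds its turn before and after the fork. The ~2 second block time is my assumption, and the function name is just for illustration; this is not a model of Bor's actual consensus or of reorg probability.

```python
# Toy calculation: how long a single producer's sprint lasts.
# ASSUMPTION: ~2 s block time (not stated in this thread).
BLOCK_TIME_SECONDS = 2.0


def sprint_duration_seconds(sprint_length: int,
                            block_time: float = BLOCK_TIME_SECONDS) -> float:
    """Wall-clock time covered by one sprint of `sprint_length` blocks."""
    return sprint_length * block_time


if __name__ == "__main__":
    # Sprint lengths taken from the post above: 64 before the fork, 16 after.
    for sprint_length in (64, 16):
        seconds = sprint_duration_seconds(sprint_length)
        print(f"sprint of {sprint_length} blocks ~ {seconds:.0f} s")
```

Under that assumption a sprint shrinks from about 128 s to about 32 s, so a producer that is cut off from the rest of the network has less time to build a long competing chain. It does not rule out a deep reorg, which is the point above.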

https://twitter.com/mihailobjelic/status/1628926312076423170?s=12&t=Ygp6XgxwDMCS7b3MYfH6Pw

The serious reorg was not just bad luck; it seems to have been triggered by a trivial bug, which was fixed right afterwards.

Moreover, the team is trying to replace Bor/Heimdall with a single client to eliminate the possibility I mentioned above.

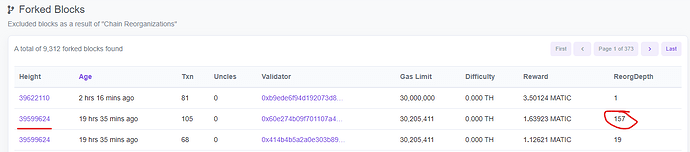

The real question is: did the reorg cause the outage, or did the outage cause the reorg? Lots of nodes were hit with a bad merkle root error, including some of ours. I’m of the opinion that this led to the outage, which led to the reorgs as validators stopped and restarted simultaneously. Low conviction opinion though - unfortunately I haven’t had a lot of time to go digging around in logs.

Yep. Would be good if some of the larger validators in the ecosystem could comment here once they’ve had time to diagnose the issue

The tl;dr is that - yes - the partial outage ‘caused’ the long re-org. Many nodes were knocked offline by the bug and this created local areas where block producers could not communicate. We will have more formal comms soon as well as discussion about possible hardforks, one of which will address the bug.

Thanks for the update <3

Re: hard fork… would that be to address the depth of the reorg? I’m assuming a fork wouldn’t be necessary to fix the merkle root error (or, more specifically, its propagation through the p2p layer) but I could be wrong.

If that is the case, will there be a bor update as well? And if so, any idea when?

Any update or news on this front for the PoS chain reorg issues?

Don’t hold your breath.

Hey @randomishwalk, @adamb, @Thogard

On the reorg, see the rationale in PIP-10, as it has some details on this.